I do not work in software development; my focus is on the infrastructure and resources needed for deployments. AI and chatbots have become essential tools, which motivated me to deploy one myself. My goal is to do this on Windows Server 2025 without paying for a subscription.

Preparation

For this scenario I will be using a virtual machine on my Lenovo P520 CAD workstation, which I use for testing in my homelab. The virtual machine runs Windows Server 2025 on a Windows Server 2025 Hyper-V host. If you want to set up such an environment, please check how to deploy a Hyper-V host and Windows Server 2025 in a homelab.

The server is a freshly installed Windows Server 2025 Standard machine. It has been fully patched, and my winget script for installing my default admin software (for example Sysinternals and my customized terminal) has been applied.

What is Miniconda3 / Anaconda?

Miniconda is a free minimal installer for conda. It is a small, bootstrap version of Anaconda that includes only conda, Python, the packages they depend on, and a small number of other useful packages, including pip, zlib and a few others. You often see it listed as a requirement for cross-platform solutions, because it lets them use Python and similar scripts on every operating system.

What is Ollama?

Ollama is a project that enables the local execution of large language models, the technology behind AI chatbots. It offers a command line interface and supports a variety of models. What matters most for us is that it runs across platforms, including Windows Server 2025, and that it has an active open source community. That community has developed a WebUI and a WebUI installer, both of which are used in this tutorial.

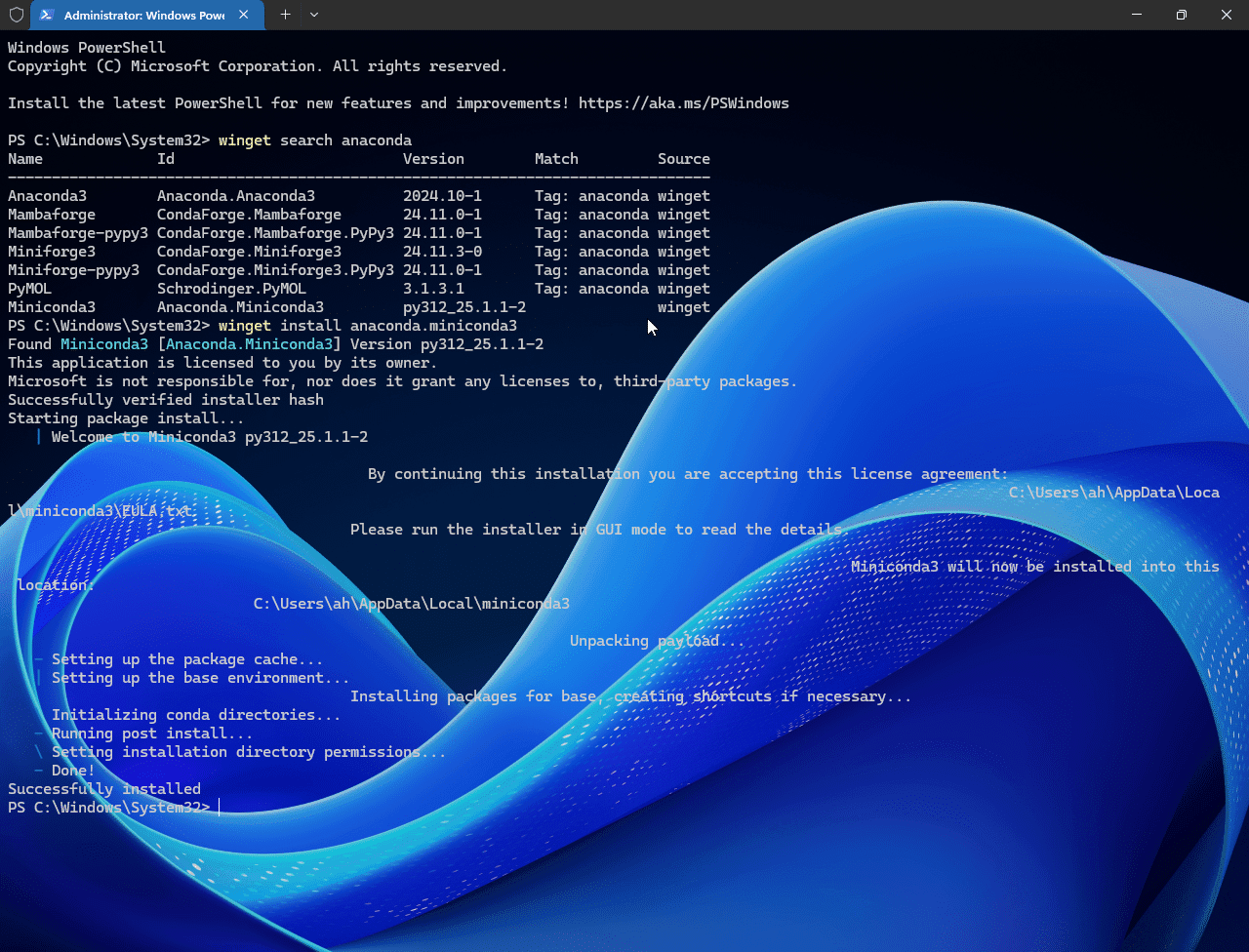

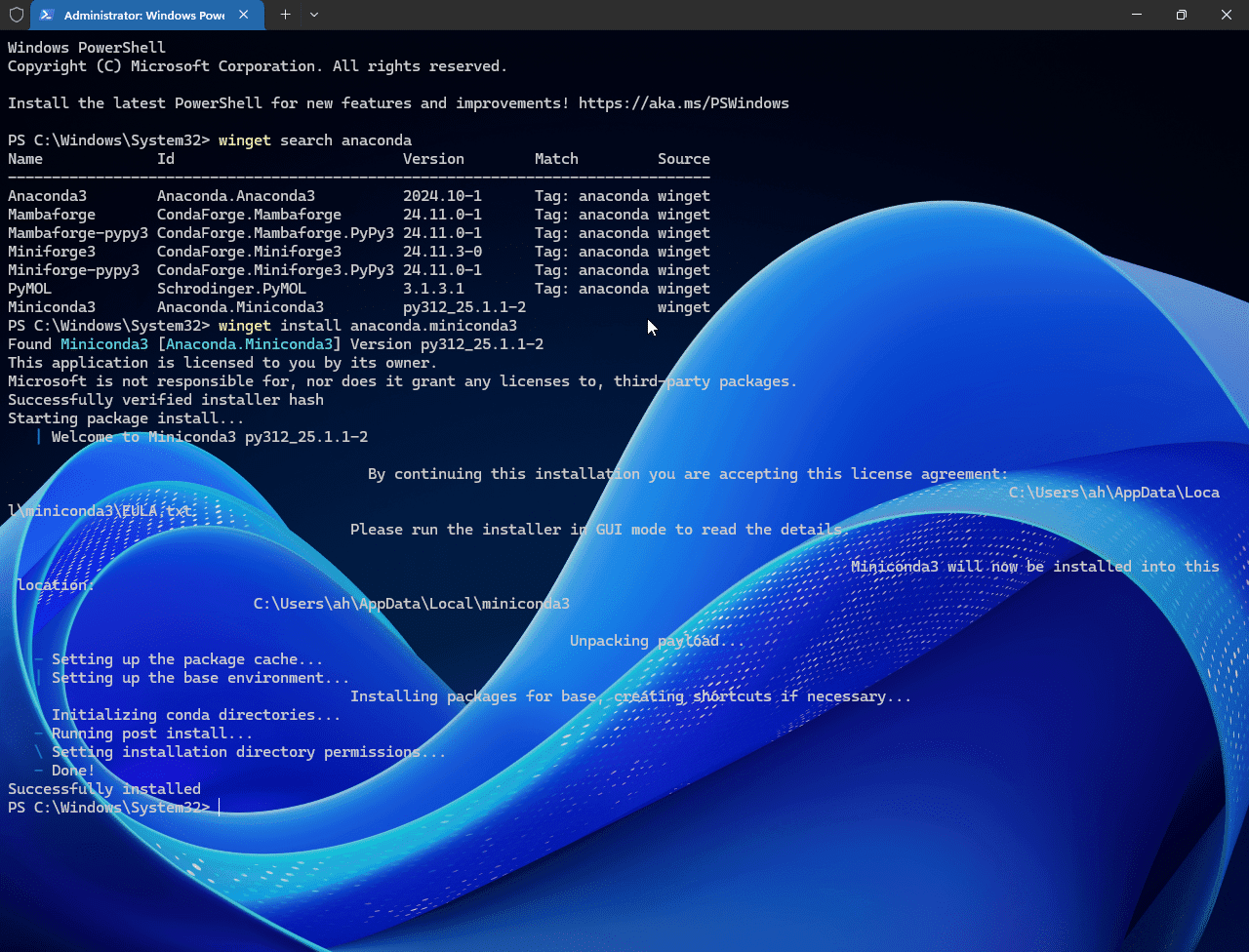

Install Miniconda3

We will use Winget for the installation of our software packages. Open the Windows Terminal as a local Administrator and run the following command.

winget install --id=Anaconda.Miniconda3 -e
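To confirm that an installation actually produced a usable command, a small check like the following can help. It is only a sketch: the helper name `tool_version` is made up for this example, and it assumes the tool answers to `--version`, which both `conda` and `ollama` do. A result of `None` does not prove the installation failed, because the installer may not have added the tool to PATH, or the terminal may need to be reopened first.

```python
import shutil
import subprocess
from typing import Optional


def tool_version(command: str) -> Optional[str]:
    """Return the first line printed by `<command> --version`, or None.

    None means the command was not found on PATH in this session,
    or that it could not be started.
    """
    executable = shutil.which(command)
    if executable is None:
        return None
    try:
        result = subprocess.run(
            [executable, "--version"],
            capture_output=True,
            text=True,
            timeout=30,
            check=False,
        )
    except (OSError, subprocess.TimeoutExpired):
        return None
    # Some tools print their version to stderr instead of stdout.
    output = (result.stdout or result.stderr).strip()
    return output.splitlines()[0] if output else None


if __name__ == "__main__":
    # "ollama" only makes sense once the Ollama step has been completed.
    for name in ("conda", "ollama"):
        print(f"{name}: {tool_version(name) or 'not found on PATH'}")
```

The same helper works for any other command line tool you install through Winget.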

Install Ollama

As with Miniconda3, we will use Winget to install Ollama. Open the Windows Terminal as a local Administrator and run the following command. If the package ID is not found, confirm it with winget search ollama.

winget install --id=Ollama.Ollama -e

Install OpenWebUI

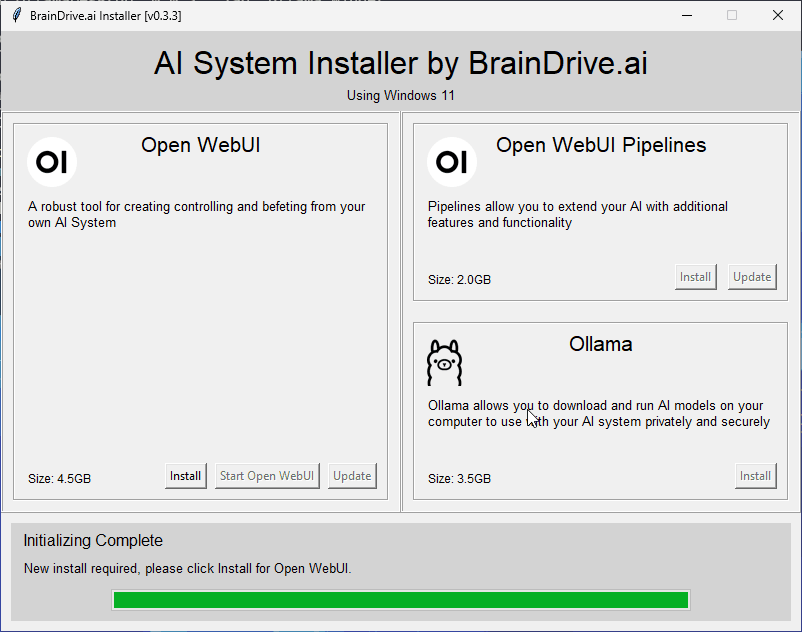

At the moment, this tool is not available through Winget, so we need to visit the GitHub repository and download and install it manually.

Go to https://github.com/BrainDriveAI/OpenWebUI_CondaInstaller/releases and download the tool.

Click on the Install button and expect the installation to take 10 to 20 minutes.

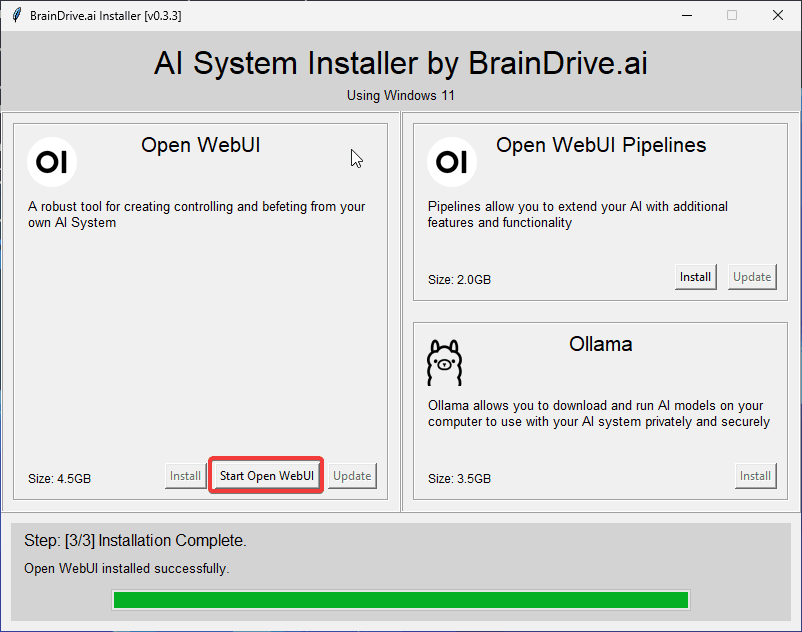

After quite a wait, the installation will finally complete. You can then start the WebUI as shown in the screenshot below, which again takes a few minutes. If you want to read something interesting in the meantime, please check my guide on becoming an IT architect.

Once the web interface is running, your Edge browser opens and prompts you to enter your credentials.
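If the browser does not open by itself, it can be useful to check whether the web interface is already listening. The sketch below only tests whether a TCP connection succeeds; it assumes port 8080, which is the port used by the URLs later in this guide, and the function name `is_port_open` is chosen for illustration.

```python
import socket


def is_port_open(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and name resolution errors.
        return False


if __name__ == "__main__":
    state = "reachable" if is_port_open("localhost", 8080) else "not reachable yet"
    print(f"Open WebUI on port 8080 is {state}")
```

An open port only shows that something is listening; it does not confirm that the login page has finished loading.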

If you need help with creating and managing your passwords, I recommend KeePassXC and have written a guide for it.

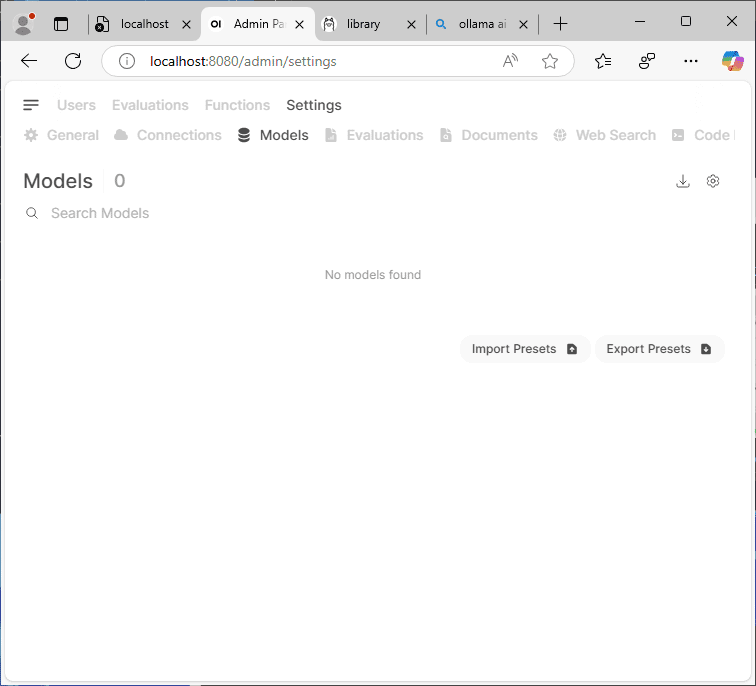

Configure your preferred LLM

For this step you need to decide on your large language model. You need to know its exact name; you can browse the available models in the Ollama library.

For this test I will be using the Microsoft model phi-4, which is published on Hugging Face as microsoft/phi-4.

Be careful what you choose, as only smaller LLMs will run on consumer hardware with 16, 32 or 64 GB of RAM.
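A rough rule of thumb can help when judging whether a model fits into memory: the weights need about parameters times bits per weight divided by eight bytes, plus some working memory. The sketch below applies that rule. The 20 percent overhead factor and the default of 4 bits per weight are assumptions for illustration, not measured values, and real usage depends on the model file and the context length.

```python
def estimate_model_ram_gb(
    params_billion: float,
    bits_per_weight: int = 4,
    overhead_factor: float = 1.2,
) -> float:
    """Very rough RAM estimate in GB for running a model on the CPU.

    params_billion:  model size in billions of parameters
    bits_per_weight: 16 for unquantized half precision, 8 or 4 when quantized
    overhead_factor: assumed allowance for context cache and runtime buffers
    """
    # One billion parameters at 8 bits per weight take about 1 GB.
    weight_gb = params_billion * bits_per_weight / 8
    return round(weight_gb * overhead_factor, 1)


if __name__ == "__main__":
    # phi-4 is a 14 billion parameter model.
    for bits in (4, 8, 16):
        print(f"14B model at {bits}-bit: about {estimate_model_ram_gb(14, bits)} GB")
```

Under these assumptions a 14 billion parameter model needs roughly 8 GB when quantized to 4 bits and more than 30 GB at 16 bits, which is why quantized models are the realistic choice for a homelab VM.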

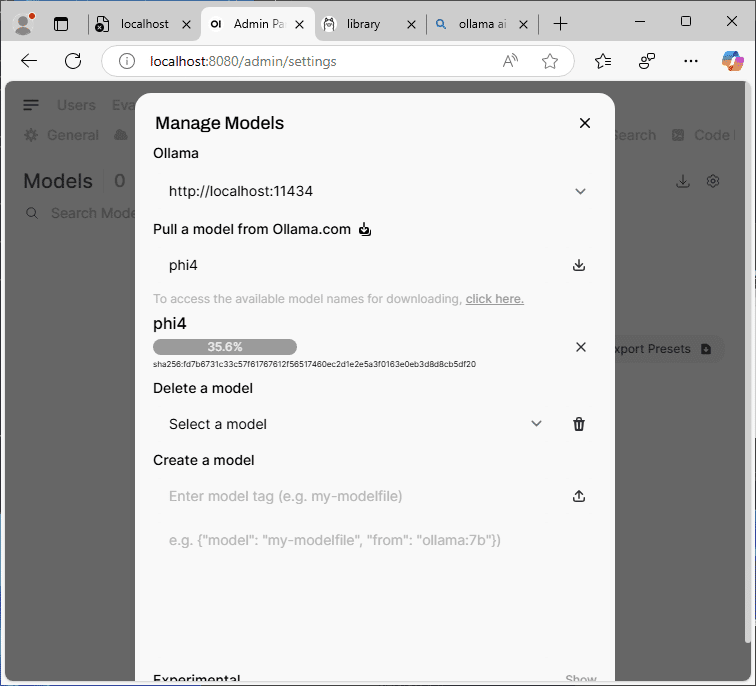

Click on your profile picture in the upper right -> settings -> admin settings -> models, or open this URL directly: http://localhost:8080/admin/settings. From the admin settings, open the models option and click the tiny download button in the upper right.

Once again the download can take some time.
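To check from outside the browser whether the download has finished, you can ask Ollama which models it has stored locally. This is a sketch under two assumptions: that Ollama listens on its default address http://localhost:11434, and that the model downloaded through the web interface ends up in the local Ollama service. It uses Ollama's `/api/tags` endpoint; the function names are made up for this example.

```python
import json
import urllib.request
from typing import Any, Dict, List

# Ollama's default local address; change it if your setup differs.
OLLAMA_URL = "http://localhost:11434"


def model_names(tags_payload: Dict[str, Any]) -> List[str]:
    """Extract the model names from the JSON returned by GET /api/tags."""
    return [entry["name"] for entry in tags_payload.get("models", [])]


def fetch_installed_models(base_url: str = OLLAMA_URL) -> List[str]:
    """Ask the local Ollama service which models it has downloaded."""
    with urllib.request.urlopen(f"{base_url}/api/tags", timeout=10) as response:
        return model_names(json.load(response))


if __name__ == "__main__":
    try:
        print(fetch_installed_models())
    except OSError as error:  # connection refused, timeout, HTTP error
        print(f"Ollama is not reachable: {error}")
```

A model that is still downloading will not appear in the list until the download is complete.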

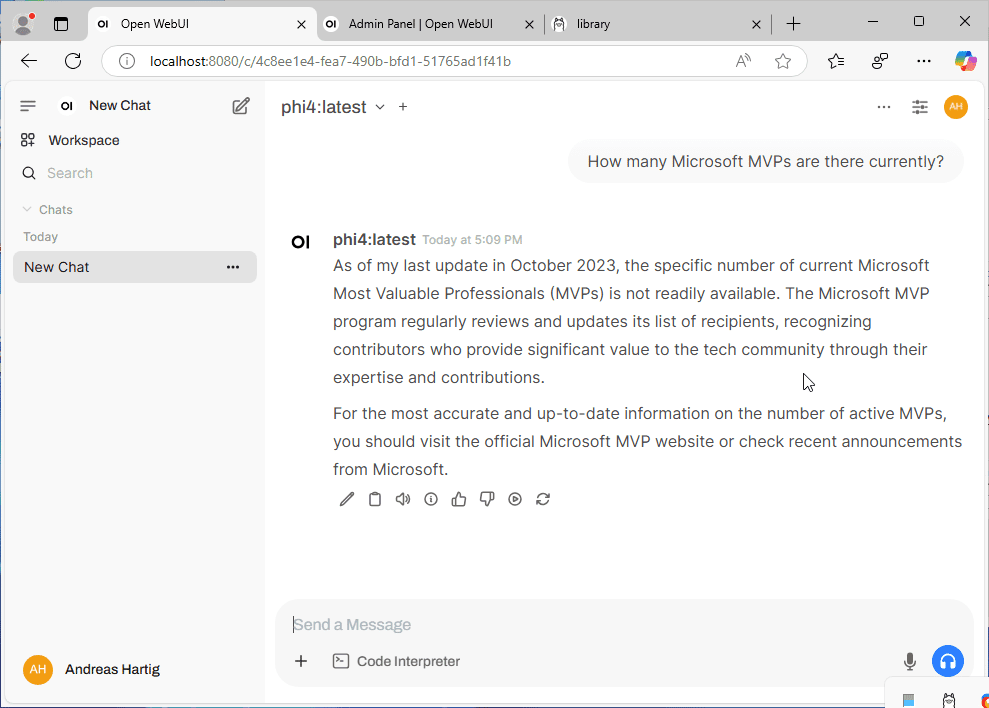

First Chat

When you return to the start screen at http://localhost:8080/, you can see the chosen language model in the upper left of the screen and ask your first question to your self-hosted and self-installed LLM / chatbot!
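The same question can also be sent without the browser, because Ollama exposes an HTTP API on the local machine. The following is a minimal sketch, assuming Ollama's default port 11434 and assuming the model is registered under the name `phi4`; replace that with whatever name your installation shows. The helper names are chosen for illustration.

```python
import json
import urllib.request

# Default endpoint of a local Ollama service.
OLLAMA_GENERATE_URL = "http://localhost:11434/api/generate"


def build_generate_request(model: str, prompt: str) -> urllib.request.Request:
    """Build a POST request that asks for one complete, non-streamed answer."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_GENERATE_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(model: str, prompt: str, timeout: float = 600.0) -> str:
    """Send the prompt and return the generated text.

    The generous timeout is deliberate: CPU-only answers can take minutes.
    """
    request = build_generate_request(model, prompt)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.load(response)["response"]


if __name__ == "__main__":
    try:
        # "phi4" is an assumed model name, see the note above.
        print(ask("phi4", "Explain in one sentence what Hyper-V is."))
    except OSError as error:
        print(f"Request failed: {error}")
```

Setting `stream` to false keeps the example simple, at the cost of waiting for the whole answer instead of seeing it appear word by word as in the web interface.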

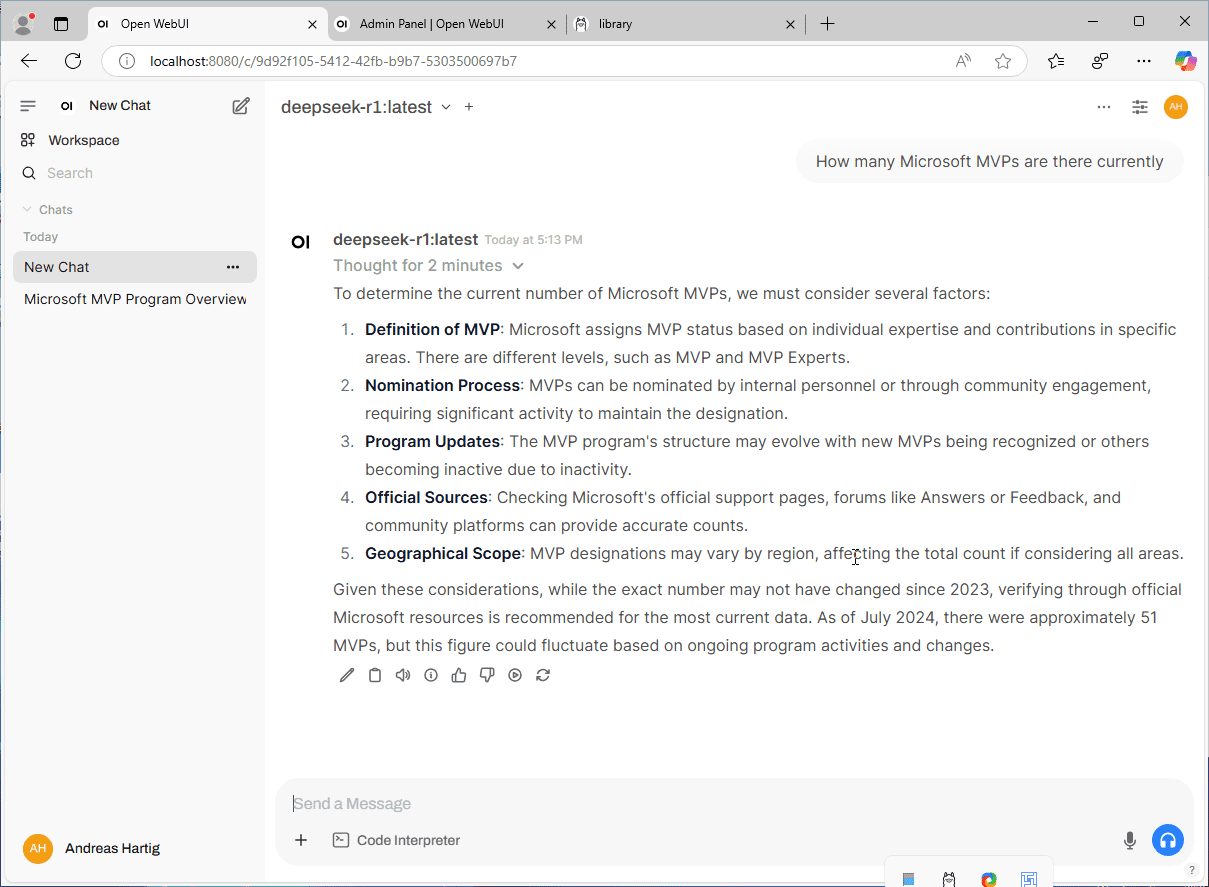

If you want to see how different the answers can be, here is a reply from deepseek, and you can immediately tell that its output was not good at all.

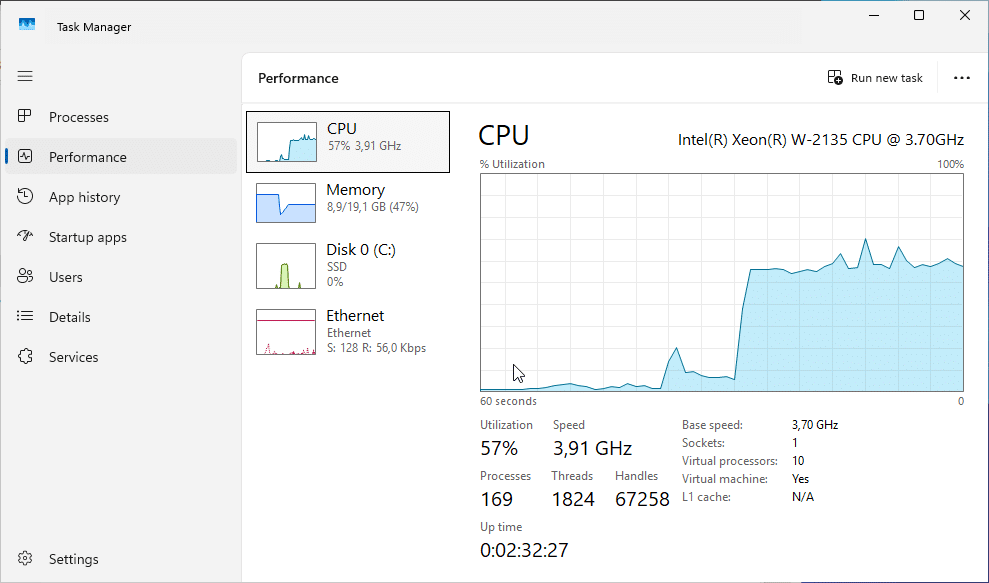

The deepseek reply took 1-2 minutes and below you can see how much my Lenovo P520 Workstation struggled.

The system consumes nearly all of the 10 virtual processors that I assigned to this VM from the Intel Xeon W-2135 CPU.
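To put a number on how slow a reply is, the generation speed can be derived from the statistics Ollama returns with a finished answer. The sketch below uses the `eval_count` (generated tokens) and `eval_duration` (nanoseconds) fields of a non-streamed API response. The sample values in the usage part are invented for illustration and are not measurements from this VM.

```python
from typing import Any, Dict


def tokens_per_second(final_response: Dict[str, Any]) -> float:
    """Generation speed from Ollama's eval_count and eval_duration fields.

    eval_duration is reported in nanoseconds, so it is scaled to seconds.
    """
    duration_ns = final_response["eval_duration"]
    if duration_ns <= 0:
        raise ValueError("eval_duration must be positive")
    return final_response["eval_count"] / duration_ns * 1_000_000_000


if __name__ == "__main__":
    # Invented example: 300 tokens generated in 90 seconds.
    sample = {"eval_count": 300, "eval_duration": 90_000_000_000}
    print(f"{tokens_per_second(sample):.1f} tokens per second")
```

Comparing this figure between models, or before and after changing the number of virtual processors, gives a more objective picture than watching the CPU graph.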

Reset the configuration

If you are documenting an installation and forgot a screenshot, or you simply want to reset your environment, search for the webui.db file. More details can be found here.

On my Windows Server 2025 default installation, the file was located here: C:\Users\%username%\OpenWebUI\env\Lib\site-packages\open_webui\data
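A cautious way to script the reset is to rename the database instead of deleting it, so the old state can be restored. The sketch below assumes that moving webui.db out of the way is what returns the web interface to its first-run state, that the path above applies to your user profile, and that Open WebUI has been stopped first so the file is not locked. The function name `set_aside_webui_db` is made up for this example.

```python
import shutil
import time
from pathlib import Path
from typing import Optional


def default_data_dir() -> Path:
    """Data folder as reported in this guide, relative to the user profile."""
    return (
        Path.home() / "OpenWebUI" / "env" / "Lib"
        / "site-packages" / "open_webui" / "data"
    )


def set_aside_webui_db(data_dir: Path) -> Optional[Path]:
    """Rename webui.db to a timestamped backup in the same folder.

    Returns the backup path, or None if no webui.db was found.
    """
    database = data_dir / "webui.db"
    if not database.is_file():
        return None
    backup = data_dir / f"webui.db.{time.strftime('%Y%m%d-%H%M%S')}.bak"
    shutil.move(str(database), str(backup))
    return backup


if __name__ == "__main__":
    # Stop Open WebUI before running this.
    result = set_aside_webui_db(default_data_dir())
    print(f"Moved database to {result}" if result else "No webui.db found")
```

To undo the reset, stop the web interface again and rename the backup file back to webui.db.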

Conclusion

This installation was based on the YouTube video by Orin Thomas located here. I really appreciate his content, as I wasn’t able to find another good guide for running a local chatbot that matched my skill level as a developer.

The test helped me better understand some of the chatbot’s components, and I can now start testing and playing around with it.